Hi all,

I’ve been extending my copy of CommonFilters and will be submitting it for consideration for 1.9.0 soon.

Current new functionality

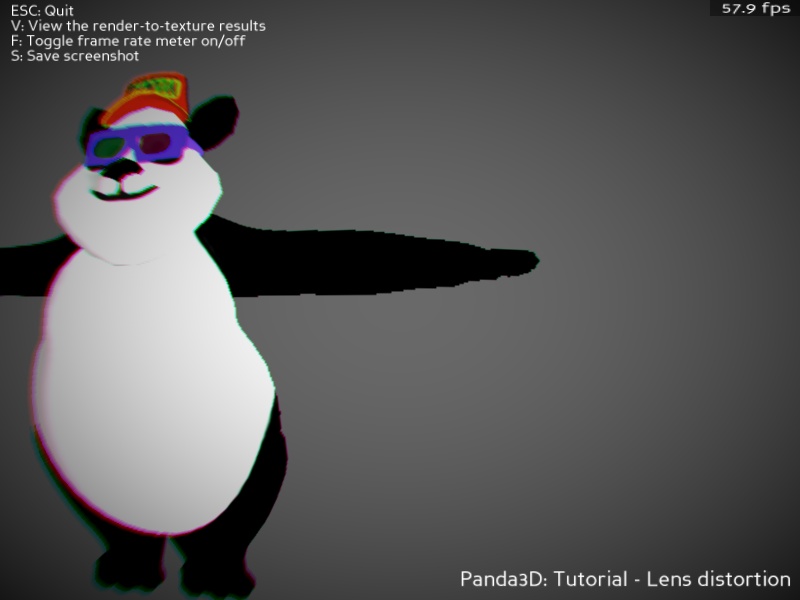

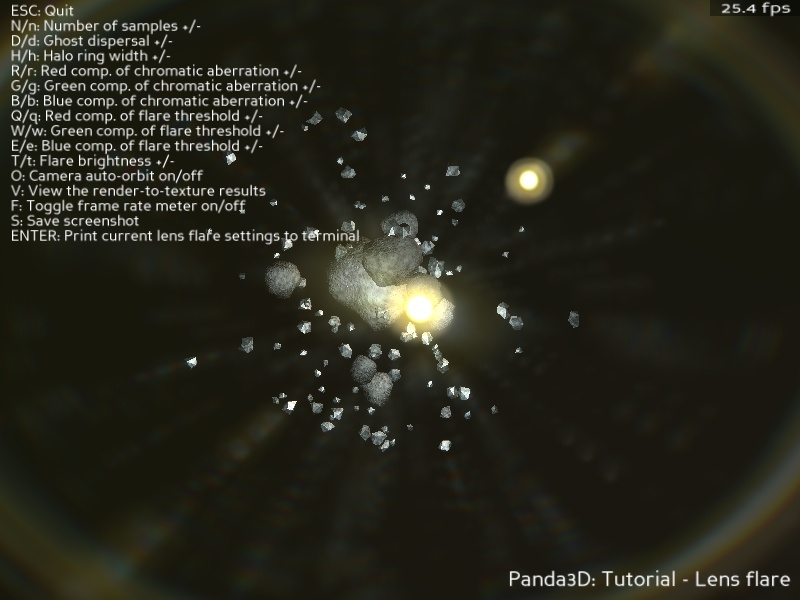

- Lens flare based on the algorithm by John Chapman. The credit goes to ninth (Lens flare postprocess filter); I’ve just cleaned up the code a bit and made it work together with CommonFilters.

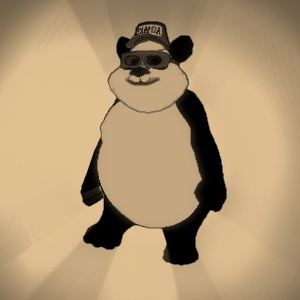

- Desaturation (monochrome) filter with perceptual weighting, and configurable strength, tinting and hue bandpass. Original.

- Scanlines (CRT TV) filter. Both static and dynamic modes, with configurable strength, choice of which field to process, and thickness of the scanlines (in pixels). Original.
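For reviewers, a CPU-side reference of what the desaturation and static scanline effects compute per pixel may help. This is a minimal sketch in plain Python, not the actual shader code: the Rec. 709 luma weights, the parameter names (`strength`, `tint`, `thickness`, `field`) and the blending formulas are assumptions for illustration, and the hue bandpass and the dynamic scanline mode are left out.

```python
# Hypothetical reference functions, not part of CommonFilters.
# Rec. 709 luma coefficients: one common choice of "perceptual weighting" (assumed).
LUMA = (0.2126, 0.7152, 0.0722)

def desaturate(rgb, strength=1.0, tint=(1.0, 1.0, 1.0)):
    """Blend a colour toward its tinted luminance.

    strength = 0 leaves the colour unchanged; strength = 1 gives a fully
    monochrome result, multiplied by the tint colour.
    """
    y = sum(c * w for c, w in zip(rgb, LUMA))
    mono = tuple(y * t for t in tint)
    return tuple((1.0 - strength) * c + strength * m for c, m in zip(rgb, mono))

def scanline_factor(pixel_row, thickness=1, field=0, strength=0.5):
    """Static scanlines: darken alternate bands of `thickness` pixel rows.

    `field` selects which bands are darkened (0 = even bands, 1 = odd bands).
    Returns a multiplier to apply to the pixel colour.
    """
    band = (pixel_row // thickness) % 2
    return 1.0 - strength if band == field else 1.0
```

For example, `desaturate((1.0, 0.0, 0.0))` maps pure red to a grey of about 0.21, and with `thickness=2` rows 0, 1, 4, 5, ... are darkened while rows 2, 3, 6, 7, ... are left alone.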

Screenshots below in separate posts.

I’ve also rearranged the sequence in the main filter as follows: CartoonInk > AmbientOcclusion > VolumetricLighting > Bloom > LensFlare > Desaturation > Inverted > BlurSharpen > Scanlines > ViewGlow.

The idea is to imitate the physical process that produces the picture. First we have effects that correspond to optical processes in the scene itself (AO, VL), then effects corresponding to lens imperfections (bloom, LF), then effects corresponding to the behaviour of the film or detector (desat, inv), and finally computer-generated effects that need the complete “photograph” as input (blur, scanlines). The debug filter ViewGlow goes last.
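The ordering rule can be expressed as data: each filter belongs to a physical category, and the enabled filters are always emitted in canonical order, regardless of the order in which the user enabled them. A minimal sketch; the `FILTER_ORDER` table and the `ordered_filters()` helper are hypothetical and do not exist in CommonFilters under these names.

```python
# Hypothetical table: canonical sequence from the proposal, tagged with the
# physical process each filter imitates.
FILTER_ORDER = [
    ("CartoonInk", "cel"),            # cartoon: the drawn cel is the input to everything else
    ("AmbientOcclusion", "scene"),    # optical processes in the scene itself
    ("VolumetricLighting", "scene"),
    ("Bloom", "lens"),                # lens imperfections
    ("LensFlare", "lens"),
    ("Desaturation", "film"),         # film / detector response
    ("Inverted", "film"),
    ("BlurSharpen", "post"),          # computer-generated, needs the complete "photograph"
    ("Scanlines", "post"),
    ("ViewGlow", "debug"),            # debug view goes last
]

def ordered_filters(enabled):
    """Return the enabled filter names in canonical order (hypothetical helper)."""
    known = {name for name, _ in FILTER_ORDER}
    unknown = set(enabled) - known
    if unknown:
        raise ValueError(f"unknown filters: {sorted(unknown)}")
    return [name for name, _ in FILTER_ORDER if name in enabled]
```

With this, `ordered_filters({"Scanlines", "Bloom", "Desaturation"})` yields `["Bloom", "Desaturation", "Scanlines"]`.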

Note that with this ordering, Desaturation, for example, takes into account the chromatic aberration produced by LensFlare; the wavelength-based aberration occurs in the lens regardless of whether the result is recorded on colour or monochrome film.

In case the scene being rendered is a cartoon, CartoonInk goes first to imitate a completely drawn cel (including the ink outlines) that is then used as input for computer-generated effects. This imitates the production process of anime.

The code changes are unfortunately not completely orthogonal to those made in the cartoon shader improvements patch, so I fear that patch needs to be processed first. I can, however, provide the current code for review purposes.

The future of CommonFilters?

I think there are still several filters that are “common” enough - in the sense of generally applicable - that it would be interesting to include them in CommonFilters (probably after 1.9.0 is released). Specifically, I’m thinking of:

- Screen-space local reflections (SSLR). A Panda implementation has already been made by ninth (Screen Space Local Reflections v2), so pending his permission, I could add this one next.

- Fast approximate antialiasing (FXAA). In my opinion, fast fullscreen antialiasing to remove jagged edges would be a killer feature to have.

As for FXAA, there have already been some attempts to create this shader for Panda. There are at least two versions floating around in the forums (one written in Cg, the other in GLSL), but I’m not sure how to obtain the necessary permissions. From a quick look at the code, the existing implementations seem to be heavily based on, if not selectively copied and pasted from, NVIDIA’s original by Timothy Lottes, and the original header file says “all rights reserved”. On the other hand, it is publicly available in NVIDIA’s SDK, as is the whitepaper documenting the algorithm. There is another version of the code at Geeks3D (based on the initial version of the algorithm), but it looks very similar, and I could not find any license information for it.

I think I need someone more experienced to help get the legal matters right; once that’s done, I can do the necessary coding.

Another important issue is the architecture of CommonFilters. As the comment at the start of the file says (already in 1.8.1), it’s monolithic and clunky. I’ve been playing around with the idea that instead of one Monolithic Shader of Doom ™, we could have several “stages” that would form a postprocessing pipeline. Multipassing is in any case required to apply blur correctly (i.e. such that it sees the output from the other postprocessing filters) - this is something that’s bugging me, so I’d like to fix it one way or another.

On the other hand, a pipeline is bureaucratic to set up, and reduces performance for those filters that could be applied in a single pass using a monolithic shader. It could be designed to create one monolithic shader per stage, based on some kind of priority system when the shaders are registered with the pipeline, but that’s starting to sound pretty complicated - I’m not sure whether it would solve problems or just create more.
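To make the “one monolithic shader per stage” idea a bit more concrete, one possible grouping rule is: a filter forces a new stage only if it must read neighbouring pixels of the *processed* image (as blur does); everything else is merged into the current stage. A minimal sketch of that rule, assuming the filter list is already sorted by priority; the `Filter` record, its `needs_full_image` flag and `group_into_stages()` are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Filter:
    """Hypothetical filter descriptor used only for this sketch."""
    name: str
    needs_full_image: bool = False  # True if it samples neighbours of the processed image

def group_into_stages(filters):
    """Split an ordered filter list into stages; each stage becomes one generated shader.

    A filter that needs the full processed image starts a new stage, so it reads
    the previous stage's output texture instead of the raw scene texture.
    """
    stages = []
    for f in filters:
        if not stages or f.needs_full_image:
            stages.append([])
        stages[-1].append(f.name)
    return stages
```

With blur disabled this collapses to a single stage, i.e. the current monolithic behaviour, so the extra pass is only paid for when a filter actually needs it.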

One should of course keep in mind the performance aspect - most of the effects in CommonFilters are such that they can be applied in a single pass using a monolithic shader, so maybe that part of the design should be kept after all.

For example, even though SSAO uses intermediate stages, the result only modulates the output, so e.g. cartoon outlines will remain. Volumetric lighting is additive, so it preserves whatever has been added by the other filters. (The normal use case is to render it in a separate occlusion pass anyway, but its placement in the filter sequence may matter if someone uses it as a type of additive radial blur, such as in Problem with volumetric lighting.)

Bloom doesn’t see cartoon outlines, but that doesn’t matter much - the bloom map is half resolution anyway, and the look of the bloom effect is such that it doesn’t produce visible artifacts even if it gets blended onto the ink. The same applies to the lens flare.

The most obvious offender is blur, since at higher blend strengths (which are useful e.g. when a game is paused and a menu opened) it will erase the processing from the other postprocessing filters.

If the monolithic design is kept, I think blur should be special-cased so that if blur is enabled, the rest of the effects render into an interQuad, and then only blur is applied to the finalQuad, using the interQuad as input. Otherwise (when blur is disabled) all effects render onto finalQuad, like in the current version.
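A one-dimensional toy example shows why a single pass is not enough: in the monolithic shader, blur samples the scene texture rather than the processed colour, so at full blend strength the ink outline vanishes. With the interQuad arrangement, blur reads the intermediate result and the outline survives (softened). The “ink” and the 3-tap box blur here are stand-ins chosen for illustration, not the actual CommonFilters shaders.

```python
# Toy 1D model; all functions are stand-ins, not CommonFilters code.

def box_blur(row):
    """3-tap box blur with clamped edges (stand-in for the real blur kernel)."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0 for i in range(n)]

def ink(row, outline_at):
    """Stand-in for CartoonInk: paint one black outline pixel."""
    out = list(row)
    out[outline_at] = 0.0
    return out

def mix(a, b, t):
    """Linear blend, as used for the blur blend strength."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

scene = [1.0] * 5

# Single pass: every effect samples the same scene texture, so blur never sees the ink.
single = mix(ink(scene, 2), box_blur(scene), t=1.0)
# -> [1.0, 1.0, 1.0, 1.0, 1.0]: the outline is erased

# Two passes: effects render into interQuad, then blur reads interQuad on finalQuad.
inter = ink(scene, 2)
double = mix(inter, box_blur(inter), t=1.0)
# -> about [1.0, 0.67, 0.67, 0.67, 1.0]: the outline survives, blurred
```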

As for the clunkiness, I think it would be useful to define each effect in a separate Python module that provides code generators for the vshader and fshader. This would remove the need to have all the shader code pasted at the top of CommonFilters.py.
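One possible shape for such a per-effect module, as a sketch only: each effect declares its uniforms and a snippet of fragment code that updates the running colour, and a small assembler concatenates the snippets in filter order. The `Effect` base class, its method names, the `k_desat` packing and the exact Cg parameter list are hypothetical; a real version would also need the vertex shader and the extra textures some effects require.

```python
class Effect:
    """Hypothetical base class: each effect module provides its own shader code."""
    name = "base"

    def uniforms(self):
        """Cg parameter declarations this effect needs."""
        return []

    def fshader_body(self):
        """Cg lines that read and update the running colour 'o_color'."""
        return []

class Inverted(Effect):
    name = "Inverted"

    def fshader_body(self):
        return ["o_color.rgb = float3(1, 1, 1) - o_color.rgb;"]

class Desaturation(Effect):
    name = "Desaturation"

    def uniforms(self):
        return ["uniform float4 k_desat"]  # x = strength (assumed packing)

    def fshader_body(self):
        return [
            "float y = dot(o_color.rgb, float3(0.2126, 0.7152, 0.0722));",
            "o_color.rgb = lerp(o_color.rgb, float3(y, y, y), k_desat.x);",
        ]

def build_fshader(effects):
    """Assemble one fragment shader from the effects, in the given order."""
    params = ["float2 l_texcoord : TEXCOORD0",
              "uniform sampler2D k_txcolor",
              "out float4 o_color : COLOR"]
    for e in effects:
        params += e.uniforms()
    body = ["o_color = tex2D(k_txcolor, l_texcoord);"]
    for e in effects:
        body.append(f"// {e.name}")
        body += e.fshader_body()
    return ("void fshader(" + ",\n             ".join(params) + ")\n{\n    "
            + "\n    ".join(body) + "\n}\n")
```

Calling `build_fshader([Desaturation(), Inverted()])` produces one shader with the desaturation code followed by the inversion code, so the generated text stays monolithic while the source lives in separate modules.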

The reason to prefer Python modules instead of .sha files is, of course, the “Radeon curse” - the support of AMD cards in Cg is pretty much limited to the arbvp1 and arbfp1 profiles, which do not support e.g. variable-length loops. Hence, to have compile-time configurable-length loops, we need a shader generator that hardcodes the configuration parameter when the shader is created. This solution is already in use in CommonFilters, but the architecture would become much cleaner if it were split into several modules.
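In practice the generator trick amounts to unrolling the loop in Python, so the Cg compiler only ever sees straight-line code with literal constants. A minimal sketch, assuming a hypothetical horizontal box blur with `num_samples` taps and a uniform `k_step` holding the texel offset; the generated shader is illustrative and not taken from CommonFilters.

```python
def unrolled_blur_fshader(num_samples):
    """Generate a Cg fragment shader whose sample loop is unrolled at generation time.

    arbfp1 cannot run loops of variable length, so the tap count and offsets are
    baked into the source as literals instead of being passed as uniforms.
    (Hypothetical generator for illustration.)
    """
    if num_samples < 1:
        raise ValueError("num_samples must be >= 1")
    half = (num_samples - 1) / 2.0
    weight = 1.0 / num_samples
    taps = [
        f"    o_color += {weight:.8f} * tex2D(k_src, l_texcoord + float2({i - half:.1f} * k_step.x, 0));"
        for i in range(num_samples)
    ]
    return "\n".join([
        "void fshader(float2 l_texcoord : TEXCOORD0,",
        "             uniform sampler2D k_src,",
        "             uniform float4 k_step,",
        "             out float4 o_color : COLOR)",
        "{",
        "    o_color = float4(0, 0, 0, 0);",
        *taps,
        "}",
    ]) + "\n"
```

Changing the tap count means regenerating and recompiling the shader, which is the price paid for staying within what arbfp1 can express.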

One further thing that is currently bugging me is that CommonFilters, and FilterManager, only make it possible to capture a window (or buffer) that has a camera. For maximum flexibility considering daisy-chaining of filters, it would be nice if FilterManager could capture a texture buffer. This can be done manually (as I did for god rays with cartoon shading in [Sample program] God rays with cartoon-shaded objects), but this is the sort of thing that really, really needs a convenience function.

The last idea would play especially well with user-side pipelining. Several instances of CommonFilters could be created to process the same scene (later instances using the output from the previous stage as their input), and the user would control which effects to enable at each stage. This would also allow mixing custom filters (added using FilterManager) with CommonFilters in the same pipeline. At least to me this sounds clean and simple.
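A sketch of the intended data flow on the user side, with each stage consuming the previous stage’s output. Everything here is hypothetical: `Stage` stands in for a CommonFilters (or FilterManager) instance that can capture a texture instead of a window, which is exactly the missing convenience function; textures are modelled as plain lists of floats so the chaining logic can be run and checked without Panda.

```python
from typing import Callable, List

Image = List[float]  # stand-in for a texture in this sketch

class Stage:
    """Hypothetical stage: applies its enabled effects, in order, to an input image."""

    def __init__(self, *effects: Callable[[Image], Image]):
        self.effects = list(effects)

    def render(self, src: Image) -> Image:
        out = src
        for effect in self.effects:
            out = effect(out)
        return out

def run_pipeline(scene: Image, stages: List[Stage]) -> Image:
    """Feed each stage's output texture to the next stage as its input."""
    tex = scene
    for stage in stages:
        tex = stage.render(tex)
    return tex

# Stand-in effects; a custom FilterManager filter would just be another callable.
def invert(img: Image) -> Image:
    return [1.0 - v for v in img]

def blur(img: Image) -> Image:
    n = len(img)
    return [(img[max(i - 1, 0)] + img[i] + img[min(i + 1, n - 1)]) / 3.0 for i in range(n)]

def darken_odd_rows(img: Image) -> Image:  # scanline stand-in
    return [v * 0.5 if i % 2 else v for i, v in enumerate(img)]

result = run_pipeline([0.0, 1.0, 0.0, 1.0], [Stage(invert), Stage(blur, darken_odd_rows)])
```

Here the second stage blurs the already-inverted image and then applies scanlines, so the user decides which effects see which intermediate result simply by choosing the stage they are enabled in.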

Thoughts anyone?

Thanks.